DeepMind’s First Medical Research Gig Will Use AI to Diagnose Eye Disease

Google’s machine learning division plans to help doctors spot the early signs of visual degeneration by sifting through a million eye scans.

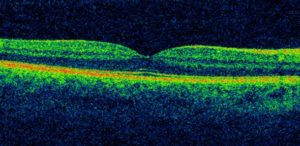

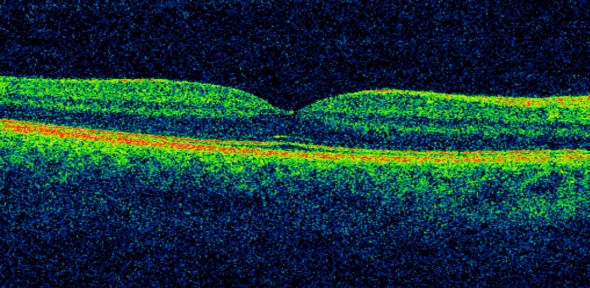

Every week, Moorfields Eye Hospital in London performs 3,000 optical coherence tomography scans to diagnose vision problems. The scans, which use scattered light to create high-resolution 3-D images of the retina, produce large quantities of data. Analyzing that data is a slow process. Understanding the images requires trained and experienced human eyes to identify problems specific to each case, leaving little or no time to identify broader, population-wide trends that could make early detection easier.

That’s just the kind of task that artificial intelligence can be used to tackle, though. So it’s perhaps not surprising that Google’s AI wing, DeepMind, has decided to partner with the hospital to apply machine learning to the problem as part of its health program. The arrangement will see DeepMind’s software study over a million eye scans—both optical coherence and more conventional images of the retina—in order to establish what happens in the eye during the early stages of eye disease.

The work will initially focus on identifying how to automatically diagnose visual problems brought about by diabetes and age-related macular degeneration. People with diabetes are 25 times more likely to suffer some kind of sight loss than those without the condition, and age-related macular degeneration is the most common cause of blindness in the U.K. In both cases, early detection can allow for more effective treatment.

An image of the retina.

Because the project is new and will use machine learning to identify patterns in the data that may not be readily apparent to human eyes, there are few precise details about how the technique will work. DeepMind does say, though, that it plans to spot the early signs of visual degeneration in order to give practitioners more time to intervene.

It’s the first health project taken on by DeepMind that is purely research-based. An earlier collaboration, with the Royal Free hospital in north London, saw the organization agree to develop a smartphone app called Streams to monitor patients with kidney disease. A report by New Scientist, however, suggested with some concern that the project freely offered up 1.6 million patient records to DeepMind.

The eye-scan data provided by Moorfields will be anonymized and historic. DeepMind claims that it will be impossible to identify a patient from the records, and that its findings “may be used to improve future care, [but] won’t affect the care any patient receives today.” The Royal Free incident, it seems, may have inspired DeepMind to tread cautiously this time around.

It’s not the first attempt to apply deep learning to health care. IBM’s Watson supercomputer, for instance, is currently drawing on 600,000 medical evidence reports and 1.5 million patient records and clinical trials to help doctors develop better treatment plans for cancer patients. Meanwhile, U.K.-based startup Babylon is developing software that takes symptoms from a user in order to suggest a course of action.

Those, however, are much broader problems where robust solutions may take a long time to emerge. Given such a well-defined task, DeepMind should be able to develop an AI that can inspect those 3,000 weekly scans to help clinicians spot warning signs of eye disease before they cause real trouble. Let’s see if it works.

(Read more: Google, New Scientist, “The Artificially Intelligent Doctor Will Hear You Now”)
