Algorithm Clones Van Gogh’s Artistic Style and Pastes It onto Other Images, Movies

The nature of artistic style is something of a mystery to most people. Think of Vincent Van Gogh’s Starry Night, Picasso’s work on cubism, or Edvard Munch’s The Scream. All have a powerful, unique style that humans recognize easily.

But what of machines? Deep neural networks are revolutionizing the way machines recognize and interpret the world. Machine vision now routinely outperforms humans at tasks such as object and face recognition, something that was unimaginable just a few years ago.

Recently, these machines have taken the first tentative steps toward recognizing artistic style and even reproducing it. Just how far this kind of work can go hasn't been clear. For example, is it possible to copy and paste an artistic style from one image onto an entire video, without producing artifacts that ruin the visual experience?

Today, Manuel Ruder and pals at the University of Freiburg in Germany show that exactly this is possible. These guys take famous works of art such as Starry Night and The Scream and transfer their style to a range of video sequences taken from movies such as Ice Age and TV programs such as Miss Marple. The result is an impressive rerendering of videos and the possibility of doing it in almost any style imaginable.

Deep neural networks consist of many layers, each of which extracts information from an image and then passes the result on to the next layer. The first layers extract broad patterns such as color, and the deeper layers extract progressively more abstract features, such as the shapes and arrangement of objects, which is what allows object recognition.

The information extracted by the deeper layers is important. It is essentially the content of an image minus surface information such as color, texture, and so on. In a way, it is the computer equivalent of a line drawing.

Last year, Leon Gatys at the University of Tübingen and a few pals began studying artistic style in this way. They discovered that it is possible to capture artistic style by looking not at the information in each layer itself but at the correlations between the different features that each layer extracts. So the way an artist reproduces a face is correlated with the way he or she reproduces a tree or a house or the sun. Capturing this correlation also captures the style.
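For readers who want to see what "correlations between features" means in practice, here is a minimal sketch of the Gram matrix that Gatys and colleagues use as their style representation: for one layer, the inner product of every pair of feature maps, summed over all pixel positions. Summing over positions throws away where things are and keeps only which features tend to appear together. The sketch assumes the feature maps are already available as flat lists of numbers (in practice they come from a pretrained network), and `gram_matrix` and `style_layer_loss` are illustrative names, not a library API.

```python
def gram_matrix(features):
    """Return G where G[i][j] is the inner product of feature maps i and j.

    `features` is a list of N feature maps from one layer, each flattened
    to a list of M activations (one per pixel position).
    """
    n = len(features)
    return [
        [sum(a * b for a, b in zip(features[i], features[j])) for j in range(n)]
        for i in range(n)
    ]


def style_layer_loss(gen_features, art_features):
    """Squared difference between the Gram matrices of a generated image and
    an artwork at one layer, normalized by 4 * N^2 * M^2 as in Gatys et al."""
    g = gram_matrix(gen_features)
    a = gram_matrix(art_features)
    n = len(gen_features)      # number of feature maps
    m = len(gen_features[0])   # number of pixel positions per map
    total = sum((g[i][j] - a[i][j]) ** 2 for i in range(n) for j in range(n))
    return total / (4 * n**2 * m**2)


# Two toy feature maps over three pixel positions.
toy = [[1, 0, 2], [0, 1, 1]]
print(gram_matrix(toy))  # [[5, 2], [2, 2]]
```

In the full method this per-layer loss is computed at several layers and combined as a weighted sum, so that both fine brushstroke-scale and coarser structure contribute to the style.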

But their key discovery was that the content of an image can be completely separated from the artistic style. What’s more, they found they could take this artistic style and copy and paste it onto the content of any other image.
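In the Gatys approach, the new image is produced by starting from random noise and repeatedly adjusting its pixels to minimize a weighted sum of two terms: a content loss, which measures how far the image's deep-layer features are from the photo's, and a style loss, which measures how far its feature correlations are from the artwork's. The sketch below shows only that weighted sum, with feature maps as plain lists; the network that produces the features (Gatys and colleagues used a pretrained VGG network) and the optimizer are left out, and the function names and weights are illustrative.

```python
def content_loss(gen_features, photo_features):
    """Half the summed squared error between the feature maps of the image
    being generated and those of the photo, taken at one deep layer."""
    return 0.5 * sum(
        (g - p) ** 2
        for gen_map, photo_map in zip(gen_features, photo_features)
        for g, p in zip(gen_map, photo_map)
    )


def total_loss(content, style, alpha, beta):
    """Weighted sum of the two objectives. The ratio alpha / beta sets how
    much of the photo's content survives versus how strongly the style is
    imposed."""
    return alpha * content + beta * style


c = content_loss([[1.0, 2.0]], [[1.0, 0.0]])  # 0.5 * (0 + 4) = 2.0
print(total_loss(c, 0.5, alpha=1.0, beta=4.0))  # 4.0
```

Because content and style enter as separate terms, the same artwork's style can be paired with any photo's content simply by swapping the feature maps fed into each term.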

All of a sudden it becomes possible to capture the abstract style of a Kandinsky and paste it onto a picture of your cat. That's a lot of fun. But it also raises the question of whether the technique can be extended to moving images.

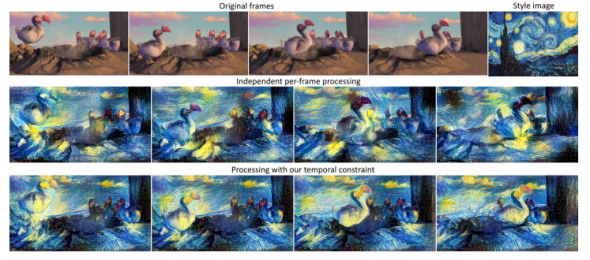

The obvious next step is to paste the artistic style onto successive images to make a video. But that immediately causes problems. Small differences between successive frames can lead to big differences in the way the new artistic style is applied. And that makes the video appear jumpy and visually incoherent. A particular problem is the edges of objects as they move or become occluded.

Now Ruder and co have solved this problem. “Given an artistic image, we transfer its particular style of painting to the entire video,” they say.

Their approach is to add a constraint that penalizes big deviations between successive stylized frames, after accounting for how the scene has moved between them, while ignoring areas of the scene that come into view after being occluded. "This allows the process to rebuild disoccluded regions and distorted motion boundaries while preserving the appearance of the rest of the image," they say.
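Roughly, the paper's consistency term compares the current stylized frame with the previous stylized frame warped along the estimated optical flow, and per-pixel weights switch the penalty off where a region has just been revealed or sits on a motion boundary. The sketch below assumes grayscale frames stored as 2D lists, integer per-pixel flow, nearest-pixel warping, and an occlusion mask that has already been computed; `warp`, `temporal_loss`, and the mask convention are hypothetical illustrations, and the sketch does not show how the authors actually estimate flow or detect occlusions.

```python
def warp(prev_frame, flow):
    """Backward-warp the previous stylized frame into the current frame.

    flow[y][x] = (dy, dx) says pixel (y, x) of the current frame came from
    (y + dy, x + dx) in the previous frame. Integer flow and nearest-pixel
    lookup keep the sketch simple; real optical flow is sub-pixel.
    """
    h, w = len(prev_frame), len(prev_frame[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dy, dx = flow[y][x]
            sy = min(max(y + dy, 0), h - 1)  # clamp source row to the image
            sx = min(max(x + dx, 0), w - 1)  # clamp source column to the image
            out[y][x] = prev_frame[sy][sx]
    return out


def temporal_loss(frame, warped_prev, mask):
    """Mean of mask-weighted squared deviations from the warped previous frame.

    mask[y][x] is 0 where the pixel was just disoccluded (or lies on a motion
    boundary) and 1 elsewhere, so newly revealed regions are free to change.
    """
    h, w = len(frame), len(frame[0])
    total = sum(
        mask[y][x] * (frame[y][x] - warped_prev[y][x]) ** 2
        for y in range(h)
        for x in range(w)
    )
    return total / (h * w)


prev = [[1.0, 2.0], [3.0, 4.0]]
shift_left = [[(0, 1), (0, 1)], [(0, 1), (0, 1)]]
warped = warp(prev, shift_left)  # [[2.0, 2.0], [4.0, 4.0]]
current = [[2.0, 2.0], [4.0, 0.0]]  # bottom-right pixel changed a lot
print(temporal_loss(current, warped, [[1, 1], [1, 0]]))  # 0.0: change is masked out
print(temporal_loss(current, warped, [[1, 1], [1, 1]]))  # 4.0: change is penalized
```

Adding a term like this to the content and style losses for every frame is what discourages flicker: stable regions are pulled toward what the previous frame looked like, while the masked regions are restyled from scratch.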

The results are impressive. The team uses its algorithm to extract the artistic style of a number of different works of art by Kandinsky, Picasso, Matisse, Turner, and of course Munch and Van Gogh.

They processed each frame of the sequences at a resolution of 1024 x 436 on an Nvidia Titan X GPU, with some of the processing running in parallel on a CPU. At first, this took about eight minutes per frame, but after optimization it dropped to an average of about three minutes per frame. Even so, the process remains computationally intensive.
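To put those per-frame figures in perspective, here is a back-of-the-envelope calculation. The clip length and frame rate (one minute at 24 frames per second) are assumptions chosen for illustration, not numbers from the paper, and `render_hours` is a hypothetical helper.

```python
def render_hours(clip_seconds, fps, minutes_per_frame):
    """Total processing time, in hours, to stylize a clip one frame at a time."""
    frames = clip_seconds * fps
    return frames * minutes_per_frame / 60


# Assumed: a one-minute clip at 24 frames per second (1,440 frames).
print(render_hours(60, 24, 3))  # 72.0 hours at about three minutes per frame
print(render_hours(60, 24, 8))  # 192.0 hours at about eight minutes per frame
```

On these assumptions, even the optimized version needs around three days of processing for a single minute of video.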

The best way to appreciate the result is to watch the video, an impressive collection of sequences from a wide range of films, computer animations, and TV programs.

Of course, there are still improvements that can be made. The algorithm struggles with very rapid or large movements between frames, and it should be possible to optimize the process further to reduce the computation time. But none of these problems look like showstoppers.

The work raises an interesting question of how much further this technique can be taken. For example, it's easy to imagine apps that could do this in the cloud as you film on your smartphone. But would it also be possible to paste the style of The Scream into three dimensions and beyond that into virtual reality? It's hard to come up with reasons why not.

All this heralds an entirely new approach not only to filmmaking but also to art. When artistic style becomes a commodity that can be cut and pasted from one image to another, what does that mean for the work of artists? It's not hard to imagine how these styles could be edited or even combined to produce hybrids.

Anyone fancy a Rubens-Picasso, a Warhol-Monet, or a Burton-Bergman?

Ref: arxiv.org/abs/1604.08610: Artistic Style Transfer for Videos
