Why a Chip That’s Bad at Math Can Help Computers Tackle Harder Problems

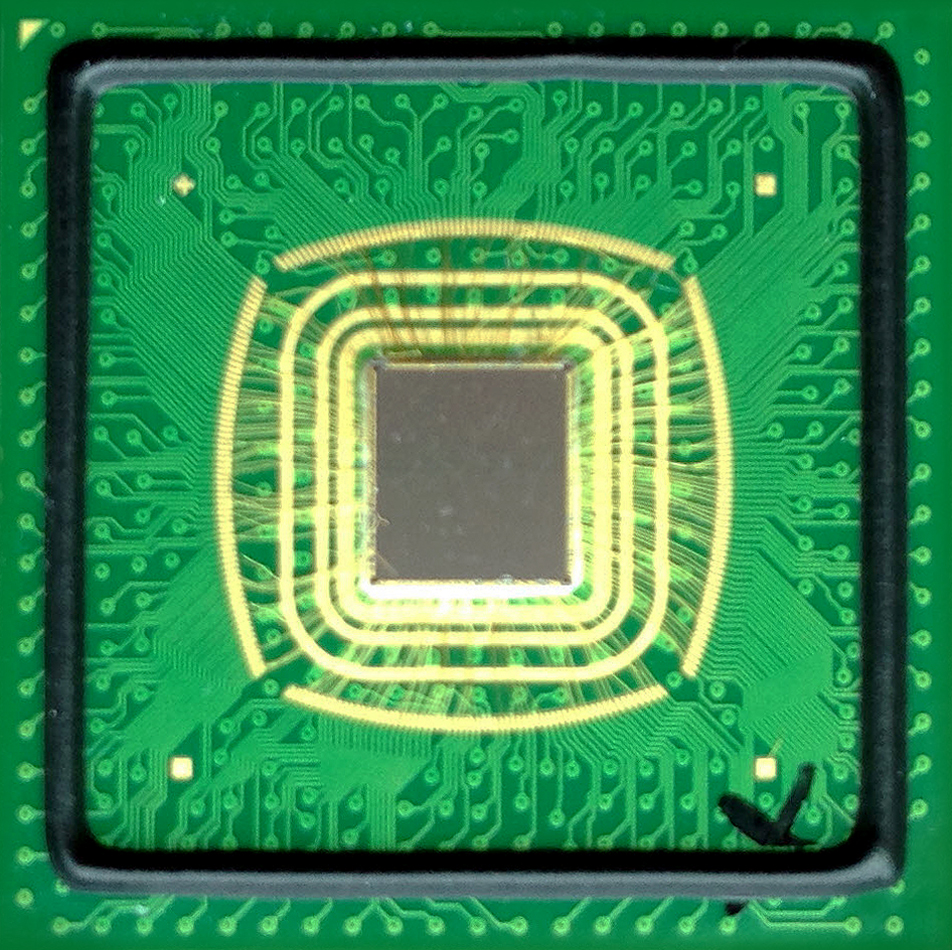

DARPA funded the development of a new computer chip that’s hardwired to make simple mistakes but can help computers understand the world.

Your math teacher lied to you. Sometimes getting your sums wrong is a good thing.

So says Joseph Bates, cofounder and CEO of Singular Computing, a company whose computer chips are hardwired to be incapable of performing mathematical calculations correctly. Ask one of them to add 1 and 1 and you will get answers like 2.01 or 1.98.

Pentagon research agency DARPA funded the creation of Singular’s chip because that fuzziness can be an asset when it comes to some of the hardest problems for computers, such as making sense of video or other messy real-world data. “Just because the hardware is sucky doesn’t mean the software’s result has to be,” says Bates.

A chip that can’t guarantee that every calculation is perfect can still get good results on many problems but needs fewer circuits and burns less energy, he says.
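To make that claim concrete, here is a minimal Python sketch of how sloppy addition can still produce a usable answer. It is not Singular's design: the error model (each sum is off by up to 1 percent, drawn uniformly) and the names `approx_add` and `approx_sum` are assumptions made purely for illustration.

```python
import random

def approx_add(a, b, rng, rel_error=0.01):
    """Add a and b, then skew the result by up to +/- rel_error.

    Hypothetical error model, chosen only to mimic "1 + 1 = 2.01 or 1.98".
    """
    return (a + b) * (1.0 + rng.uniform(-rel_error, rel_error))

def approx_sum(values, rng):
    """Sum a list pairwise so each value passes through only ~log2(n) sloppy adds."""
    if len(values) == 1:
        return values[0]
    mid = len(values) // 2
    return approx_add(approx_sum(values[:mid], rng),
                      approx_sum(values[mid:], rng), rng)

rng = random.Random(0)  # fixed seed so runs are repeatable

# A single sloppy addition: always somewhere between 1.98 and 2.02.
one_plus_one = approx_add(1, 1, rng)

# 1,024 noisy "sensor" readings scattered around a true value of 10.
readings = [10.0 + rng.uniform(-2.0, 2.0) for _ in range(1024)]

exact_mean = sum(readings) / len(readings)
approx_mean = approx_sum(readings, rng) / len(readings)
rel_diff = abs(approx_mean - exact_mean) / exact_mean

print(f"1 + 1 = {one_plus_one:.3f}")
print(f"exact mean {exact_mean:.3f}, approximate mean {approx_mean:.3f}")
```

In this toy model the readings themselves wobble by as much as 20 percent, so an average that is off by a small fraction because of sloppy adds loses little. Summing in pairs is a design choice: it limits each reading to 10 imprecise additions, so the worst-case error stays near 10 percent and the typical error is far smaller.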

Bates has worked with Sandia National Lab, Carnegie Mellon University, the Office of Naval Research, and MIT on tests that used simulations to show how the S1 chip’s inexact operations might make certain tricky computing tasks more efficient. The best fits are problems where the data comes with built-in noise from the real world, or where some approximation is needed anyway. Bates reports promising results for applications such as high-resolution radar imaging, extracting 3-D information from stereo photos, and a technique called deep learning that has delivered a recent burst of progress in artificial intelligence.

This chip can’t get its arithmetic right, but could make computers more efficient at tricky problems like analyzing images.

In a simulated test using software that tracks objects such as cars in video, Singular’s approach was capable of processing frames almost 100 times faster than a conventional processor restricted to doing correct math, while using less than 2 percent of the power.

Bates is not the first to pursue the idea of using hand-wavy hardware to crunch data more efficiently, a notion known as approximate computing (see “10 Breakthrough Technologies 2008: Probabilistic Chips”). But DARPA’s investment in his chip could give the fuzzy math dream its biggest tryout yet.

Bates is building a batch of error-prone computers that each combine 16 of his chips with a single conventional processor. DARPA will get five such machines sometime this summer and plans to put them online for government and academic researchers to play with. The hope is that they can prove the technology’s potential and lure interest from the chip industry.

DARPA funded Singular’s chip as part of a program called Upside, which is aimed at inventing new, more efficient ways to process video footage. Military drones can collect vast quantities of video, but it can’t always be downloaded during flight, and the computer power needed to process it in the air would be too bulky.

It will take notable feats of software and even cultural engineering for imprecise hardware to take off. It’s not easy for programmers used to the idea that chips are always super-precise to adapt to ones that aren’t, says Christian Enz, a professor at the Swiss Federal Institute of Technology in Lausanne who has built his own approximate computing chips. New tools will be needed to help them do that, he says.

But Deb Roy, a professor at the MIT Media Lab and Twitter’s chief media scientist, says that recent trends in computing suggest approximate computing may find a readier audience than ever. “There’s a natural resonance if you are processing any kind of data that is noisy by nature,” he says. That’s become more and more common as programmers look to extract information from photos and video, or have machines make sense of the world and human behavior, says Roy.
